Engineers need to stop asking for "simple" changes

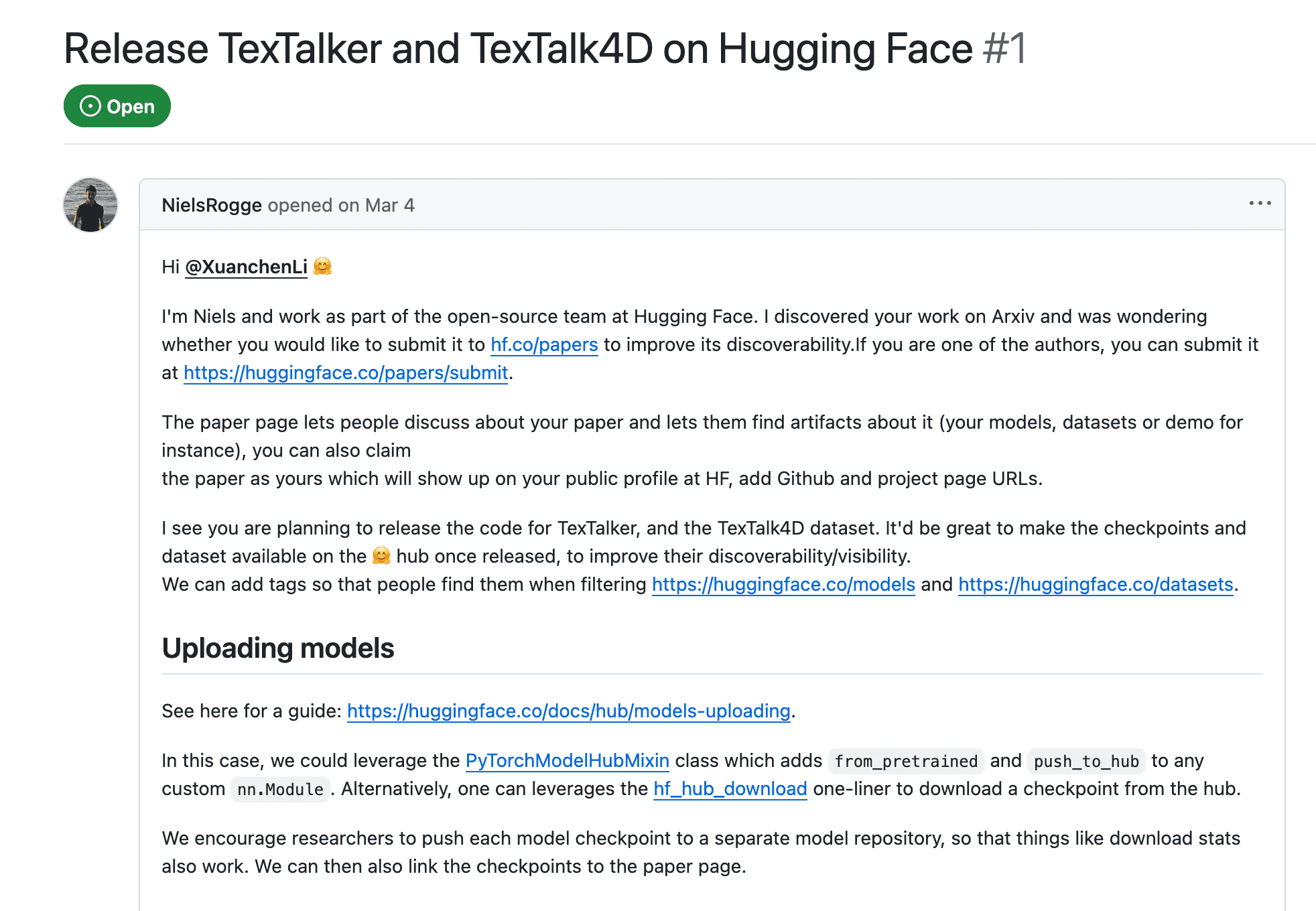

I was looking at a GitHub issue where a Hugging Face Developer Relations Engineer was asking a researcher to integrate their AI models into the HF platform. The request was polite and professional: "It'd be great to make the checkpoints and dataset available on the 🤗 hub once released, to improve their discoverability/visibility. Here's a guide on how to upload models."

This is exactly the kind of request that happens thousands of times across open source. A company wants people to integrate with their tooling or platform, they provide documentation and ask maintainers to follow it. Seems reasonable, right?

But when I actually read the Hugging Face guide they linked, something clicked. This guide is terrible for making code changes, and that reveals a bigger problem with how we make "simple code change" requests.

Linking a guide isn't as helpful as you think

That Hugging Face guide spends paragraphs explaining download metrics and organization pages. It talks about TensorBoard integration and model cards. It's optimized for selling you on the platform's capabilities: "Models on the Hub are Git-based repositories, which give you versioning, branches, discoverability and sharing features!"

The guide works well for explaining what Hugging Face offers. But if you're a researcher sitting there with a PyTorch model wondering "what exactly do I need to change in my code?" - that's not what this guide was built for. It doesn't answer the questions that actually matter for implementation:

- What files do I need to modify?

- What imports do I add where?

- How do I verify this actually worked?

- What if my model doesn't fit the standard pattern?

- How long will each step take?

If Hugging Face wants to increase the probability that researchers actually upload their implementations, they shouldn't add the extra cognitive load of understanding what Hugging Face is about and all the ways you can use it. In this scenario, they could take on more of the work themselves by writing guidance optimized specifically for making the code changes, not for explaining platform features.

The double dip problem

I want to acknowledge that Hugging Face is fully justified in explaining their platform features. After all, this DevRel person is trying to sell researchers on using Hugging Face. So asking them to read about all the benefits makes sense from one point of view.

But in this specific scenario, you have to consider that Hugging Face is actually going to benefit a lot from researchers putting their code on the platform. So what they're doing right now is trying to double dip - though I don't think this is intentional.

They're burying the technical details you need to make the change inside marketing exposition. They want the researcher to do work that's going to improve Hugging Face's SEO and platform adoption, while also consuming sales pitches about collaboration features.

I think we're just so used to "this is what guides look like" that developer relations people aren't even thinking about the next step: they could be writing prompts instead. It's possible to single dip - to make integration truly frictionless while still getting the platform benefits. Providing a prompt for a coding agent is just better for everyone involved.

What an AI-first approach would look like

If you think about it, what's the minimum information required to make these changes? That's exactly what you'd put in a prompt for a coding agent.

The Hugging Face developer relations engineer could write integration-specific guidance:

Look for the main model class in this repository.

It's probably called [Model/Network/Generator] and inherits from nn.Module.

Add PyTorchModelHubMixin as a second parent class.

Import it from huggingface_hub.

If the __init__ method takes non-JSON-serializable arguments, we'll need to modify them - ask the maintainer about this specific case.

Add these metadata fields based on the paper: [repo_url, pipeline_tag, license].

Create a test script that verifies push_to_hub works.

If there are any choices you can't make automatically, ask the maintainer these specific questions: [list].

The beautiful thing is that Hugging Face could test this prompt on dozens of different research projects. Once they've refined it to handle the common patterns, new projects can simply copy the prompt, paste it, and run it.

That approach encodes the actual decision-making process instead of explaining platform features. The DevRel person has seen this integration dozens of times - they should distill that knowledge into actionable form.

The broader pattern

This applies to all those "simple" integration requests:

Instead of: "Can you add a Dockerfile? Here's Docker documentation."

Write a prompt: "Create a Dockerfile for this Python app. I need to run this on ARM64 Linux. I want to use environment variables to control the listening address and the database connection. If the repository has a requirements.txt that mentions a Python version, use that. If not, scan the imports and make a best guess. Ask the maintainer about any hard Python version requirements."
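The Python-version rule in that prompt is a small two-branch decision. As a hedged sketch, assuming the version shows up in `requirements.txt` as a PEP 508 `python_version` environment marker, the branches can be written out explicitly. `pick_python_version`, `read_requirements`, and the `3.11` fallback are hypothetical; the fallback stands in for the agent's best guess from scanning imports, which this sketch does not attempt.

```python
import re
from pathlib import Path
from typing import Optional, Tuple

# Matches PEP 508 markers such as: numpy>=1.24; python_version >= "3.10"
MARKER = re.compile(r"python_version\s*(?:==|>=|~=)\s*['\"](\d+\.\d+)['\"]")


def read_requirements(repo: Path) -> Optional[str]:
    """Return the text of requirements.txt, or None if the repo has none."""
    path = repo / "requirements.txt"
    return path.read_text() if path.is_file() else None


def pick_python_version(
    requirements_txt: Optional[str], fallback: str = "3.11"
) -> Tuple[str, bool]:
    """Return (version, needs_maintainer_confirmation)."""
    if requirements_txt:
        found = MARKER.findall(requirements_txt)
        if found:
            # Several markers: take the highest version mentioned.
            best = max(found, key=lambda v: tuple(int(p) for p in v.split(".")))
            return best, False
    # No usable hint: use a guess and flag it so the maintainer gets asked.
    return fallback, True
```

Usage would look like `version, ask = pick_python_version(read_requirements(Path(".")))`. The boolean only records whether the version was inferred or guessed; the prompt still has the agent ask the maintainer about hard requirements either way.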

Instead of: "Could you integrate our logging framework? Here's our guide."

Write a prompt: "Add our logging framework to this codebase. Look for existing logging setup and replace it. If there's no existing setup, add it to the main application entry point. Configure it for structured output. If the app already uses structured logging, adapt to that pattern."

Prompts can handle complexity

Prompts can also be interactive and comprehensive. You can write:

"Ask the maintainer what authentication method they prefer: API keys, OAuth, or JWT. Based on their answer, configure accordingly. If they choose OAuth, ask for redirect URLs. If they're not sure, explain the tradeoffs and recommend JWT for most use cases."

You can encode simple dialogue trees. You can make a prompt that's 20 pages long and full of example code. The goal is to skip some of the back-and-forth discussion.
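As a rough illustration, the authentication prompt is a dialogue tree small enough to write down as data. The sketch below is hypothetical (`AUTH_TREE` and `walk` are illustrative names, not any agent's API): each node is either a question with answer branches or a final instruction, and `ask` is injected so the tree can be exercised without a real conversation.

```python
from typing import Any, Callable, Dict, List, Tuple, Union

# A node is either a final instruction (str) or a question with answer branches.
Node = Union[str, Dict[str, Any]]

AUTH_TREE: Node = {
    "question": "Which authentication method do you prefer: API keys, OAuth, or JWT?",
    "branches": {
        "api keys": "Configure API-key authentication.",
        "jwt": "Configure JWT authentication.",
        "oauth": {
            "question": "Which redirect URLs should OAuth allow?",
            # "*" accepts any free-form answer, such as a list of URLs.
            "branches": {"*": "Configure OAuth with the redirect URLs provided."},
        },
        "not sure": "Explain the tradeoffs, then recommend JWT for most use cases.",
    },
}


def walk(node: Node, ask: Callable[[str], str]) -> Tuple[str, List[str]]:
    """Ask questions until a final instruction is reached; return it plus the answers."""
    answers: List[str] = []
    while not isinstance(node, str):
        answer = ask(node["question"]).strip()
        answers.append(answer)
        branches = node["branches"]
        # Exact (case-insensitive) match first, then the "*" wildcard, else re-ask.
        node = branches.get(answer.lower(), branches.get("*", "Unrecognized answer; ask again."))
    return node, answers
```

Written this way, every branch the requester anticipated is visible at a glance, which is the same property a well-structured prompt gives the agent.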

The AI threshold as a quality test

Here's what AI has made obvious: if your request is simple enough to ask of someone else, you should be able to write a prompt that accomplishes it.

Try feeding that Hugging Face guide to Claude and asking it to integrate a random PyTorch repository. Will it fail to capture the nuances and make something up? It might, because the guide doesn't contain enough technical detail about the implications of different options. You might get code that runs, but there's a good chance it will have defects that trace back to information missing from the guide.

The Hugging Face engineer could include all the information about these nuances. Then maintainers could be pretty confident that the prompt they're using will work the first time. And if you can't write guidance with that level of technical completeness, that's a signal:

- You don't actually understand what you're asking for

- The change is more complex than you thought

- You're being lazy and expecting someone else to do the thinking

All three are reasons to reconsider making the request.

What this changes for developer relations

Developer relations people are in a unique position. They see the same integration patterns repeatedly. Instead of writing guides that explain platform features, they should write prompts that developers can paste into their coding agents.

When requesters have to write working prompts, they're forced to:

- Think through their actual requirements upfront

- Understand the implementation details they're asking for

- Encode decision trees instead of expecting back-and-forth

- Test whether their guidance actually works

The new standard

We're moving toward a world where requests look like:

"Here's a prompt that adds the Hugging Face integration I need - could you run it and let me know if you want any adjustments?"

Instead of:

"Could you integrate with Hugging Face? Here's our documentation."

The people making requests do the cognitive work of understanding what they want. The maintainers review and approve. AI handles the mechanical translation from requirements to code.

Summary

- Integration guides are written to sell platforms, not help with code changes

- Requesters should write prompts with specific constraints, not link to documentation

- Developer relations should provide working prompts, not tutorial guides

- If you can't write a working prompt, reconsider your request

- This forces requesters to understand what they're actually asking for

Every time you're about to ask for a "simple" integration, ask yourself: "Could I write a prompt that specifies exactly what I want?"

If you can't, you're probably asking someone else to do thinking that you should be doing yourself.